I just finished setting up a configuration where a separate Maven project is used to run some JWebUnit tests on a web application. The focus is on regularly checking the application's functionality. We don't care about running on the production stack (yet); we'd rather have a test suite that is easy to run and quick.

We assume that the application we want to run defaults to some standalone setup, e.g. by using something like an in-memory instance of

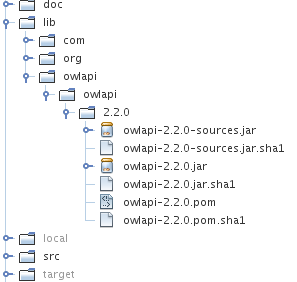

hsqldb as persistence solution. It also has to be available via Maven, in the simple case by running a "mvn install" locally, the nicer solution involves running your own repository (e.g. using

Artifactory).
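
If you go the repository route, the test project needs to know where to find the webapp's snapshots. Below is a minimal sketch, assuming a hypothetical Artifactory instance: the repository id `my-org-snapshots` and the URL are placeholders, not part of the original setup. With a plain local "mvn install" you don't need this at all, because Maven always consults the local repository.

```xml
<!-- child of <project>; id and URL are hypothetical placeholders -->
<repositories>
  <repository>
    <id>my-org-snapshots</id>
    <url>http://artifactory.my.org/artifactory/libs-snapshot</url>
    <!-- this repository only serves SNAPSHOT builds of our own webapp -->
    <releases>
      <enabled>false</enabled>
    </releases>
    <snapshots>
      <enabled>true</enabled>
    </snapshots>
  </repository>
</repositories>
```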

Here is a sample POM for such a project. The rest of the story is in the inline documentation:

    <!--
      === POM for testing a war file coming out of a separate build. ===

      Basic idea:
      - run the Surefire plugin for the tests, but in the integration-test phase
      - use Cargo to start an embedded Servlet engine before the integration tests and shut it down afterwards
      - use tests written with JWebUnit and its HtmlUnit backend to do the actual testing work

      This configuration can be run with "mvn integration-test".
    -->
    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
      <modelVersion>4.0.0</modelVersion>
      <groupId>my.org</groupId>
      <artifactId>myApp-test</artifactId>
      <name>MyApp Test Suite</name>
      <version>1.0.0-SNAPSHOT</version>
      <description>A set of functional tests for my web application</description>
      <build>
        <plugins>
          <plugin>
            <!-- The Cargo plugin manages the Servlet engine -->
            <groupId>org.codehaus.cargo</groupId>
            <artifactId>cargo-maven2-plugin</artifactId>
            <executions>
              <!-- start the engine before the tests -->
              <execution>
                <id>start-container</id>
                <phase>pre-integration-test</phase>
                <goals>
                  <goal>start</goal>
                </goals>
              </execution>
              <!-- stop the engine after the tests -->
              <execution>
                <id>stop-container</id>
                <phase>post-integration-test</phase>
                <goals>
                  <goal>stop</goal>
                </goals>
              </execution>
            </executions>
            <configuration>
              <!-- we use an embedded Jetty 6 -->
              <container>
                <containerId>jetty6x</containerId>
                <type>embedded</type>
              </container>
              <!-- don't block waiting for Ctrl-C; let the build continue once Jetty is up -->
              <wait>false</wait>
              <!-- the actual configuration for the webapp -->
              <configuration>
                <!-- pick a port that is likely to be free; it has to match the port used in the tests -->
                <properties>
                  <cargo.servlet.port>9635</cargo.servlet.port>
                </properties>
                <!-- what to deploy and how (resolved from the dependencies below) -->
                <deployables>
                  <deployable>
                    <groupId>my.org</groupId>
                    <artifactId>my-webapp</artifactId>
                    <type>war</type>
                    <properties>
                      <context>/</context>
                    </properties>
                  </deployable>
                </deployables>
              </configuration>
            </configuration>
          </plugin>
          <plugin>
            <!-- configure the Surefire plugin to run the tests in the integration-test
                 phase instead of the normal test phase of the lifecycle -->
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-surefire-plugin</artifactId>
            <configuration>
              <!-- skip the default run in the test phase... -->
              <skip>true</skip>
            </configuration>
            <executions>
              <execution>
                <phase>integration-test</phase>
                <goals>
                  <goal>test</goal>
                </goals>
                <configuration>
                  <!-- ...and run the tests here instead, while the container is up -->
                  <skip>false</skip>
                </configuration>
              </execution>
            </executions>
          </plugin>
        </plugins>
      </build>
      <dependencies>
        <!-- we use the HtmlUnit variant of JWebUnit for testing -->
        <dependency>
          <groupId>net.sourceforge.jwebunit</groupId>
          <artifactId>jwebunit-htmlunit-plugin</artifactId>
          <version>2.2</version>
          <scope>test</scope>
          <exclusions>
            <exclusion>
              <groupId>javax.servlet</groupId>
              <artifactId>servlet-api</artifactId>
            </exclusion>
          </exclusions>
        </dependency>
        <!-- we depend on our own app, so the deployment setup above can find it -->
        <dependency>
          <groupId>my.org</groupId>
          <artifactId>my-webapp</artifactId>
          <version>1.0-SNAPSHOT</version>
          <type>war</type>
        </dependency>
      </dependencies>
    </project>
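
As the POM stands, the port 9635 has to be kept in sync by hand between Cargo and the tests. One way to keep a single copy, sketched here as an assumption and not part of the original setup, is to declare the port once as a Maven property and pass it to the tests as a system property. The property name `myapp.test.port` is hypothetical. `systemPropertyVariables` requires Surefire 2.5 or later, so you may need to pin the Surefire version in the POM.

```xml
<!-- 1. Declare the port once, as a child of <project>
        (the property name is hypothetical) -->
<properties>
  <myapp.test.port>9635</myapp.test.port>
</properties>

<!-- 2. Inside Cargo's inner <configuration>, reference the property -->
<properties>
  <cargo.servlet.port>${myapp.test.port}</cargo.servlet.port>
</properties>

<!-- 3. In the Surefire integration-test execution, pass it on to the
        tests as a system property (Surefire 2.5 or later) -->
<configuration>
  <skip>false</skip>
  <systemPropertyVariables>
    <myapp.test.port>${myapp.test.port}</myapp.test.port>
  </systemPropertyVariables>
</configuration>
```

A test can then read the value with `System.getProperty("myapp.test.port")` when building its base URL, so changing the port means editing a single line of the POM.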

Note that in this case we depend on a SNAPSHOT version of our own app. This means you can run this test suite on your continuous integration server, triggered by each successful build of the main application (as well as by any change to the tests themselves). If you use my favorite CI server, Hudson, you can even tell it to aggregate the test results into the main project.

With a POM like this in place, you can launch straight into the JWebUnit Quickstart. A basic setup needs only the POM and one test case in src/test/java. Of course, you can use a different testing framework if you want to.

You can also switch to a different Servlet engine or deploy into one that is already running. The Cargo documentation is pretty decent, so I recommend looking at it. They certainly know more Servlet engines than I do.
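
To give a rough idea of what switching engines involves, the sketch below replaces the embedded Jetty with a Tomcat 6 that Cargo downloads and installs itself through its `zipUrlInstaller`. The download URL is a hypothetical placeholder. The set of supported container ids depends on your Cargo version, so check the Cargo documentation before relying on this.

```xml
<!-- replaces the <container> element of the Cargo plugin configuration -->
<container>
  <containerId>tomcat6x</containerId>
  <!-- a local container, installed from the zip below -->
  <type>installed</type>
  <zipUrlInstaller>
    <!-- hypothetical URL: point this at a Tomcat 6 distribution you trust -->
    <url>http://downloads.my.org/apache-tomcat-6.zip</url>
  </zipUrlInstaller>
</container>
```

The executions, the port property and the deployables should be able to stay as they are, since they don't depend on which engine Cargo starts.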

If you use profiles to adjust the Cargo configuration appropriately, you should be able to run the same test suite against a proper test system that uses the same stack as your production environment. I haven't gone there yet, but I intend to. The setup used here is really meant for regular tests after each commit. Because the build server triggers them automatically, their runtime doesn't burden the developers, yet they are still fast enough to give you confidence in what you are doing.
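
A profile for such a production-like run might look roughly like the following sketch. It assumes a Tomcat 6 installation at a hypothetical `/opt/tomcat6`, and the profile id `prod-stack` is also made up. Maven merges a profile's plugin configuration into the main one element by element, so only the container definition should change. The port and deployables from the main POM are kept.

```xml
<!-- child of <project>, next to <build> -->
<profiles>
  <profile>
    <!-- hypothetical profile id -->
    <id>prod-stack</id>
    <build>
      <plugins>
        <plugin>
          <groupId>org.codehaus.cargo</groupId>
          <artifactId>cargo-maven2-plugin</artifactId>
          <configuration>
            <!-- merged into the main Cargo configuration; overrides containerId and type -->
            <container>
              <containerId>tomcat6x</containerId>
              <type>installed</type>
              <!-- hypothetical path to an existing Tomcat matching production -->
              <home>/opt/tomcat6</home>
            </container>
          </configuration>
        </plugin>
      </plugins>
    </build>
  </profile>
</profiles>
```

You would run it with `mvn integration-test -Pprod-stack`. Without the flag, the embedded Jetty setup remains the default, so the quick per-commit run is unaffected.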